Fine-Tuning LLMs: A Practical Guide to Enhanced Performance

Large Language Models (LLMs) have revolutionized natural language processing, but their out-of-the-box performance often falls short on specialized tasks. Fine-tuning, the process of further training a pre-trained LLM on a smaller, task-specific dataset, is one of the most effective ways to adapt them. This tutorial is a practical guide to fine-tuning LLMs, covering current techniques, frameworks, and strategies for optimizing performance on your specific needs.

1. Understanding the Landscape of LLM Fine-Tuning

The LLM field evolves quickly, with new models, frameworks, and fine-tuning techniques appearing regularly, so staying current pays off. The growing availability of accessible, customizable models has put fine-tuning within reach of far more teams, making it an essential skill.

Recent Developments in LLMs

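- Model Architectures: Decoder-only architectures (like GPT and Llama) dominate today's generative LLMs, encoder-decoder architectures (like T5) remain common for sequence-to-sequence tasks such as translation and summarization, and mixture-of-experts (MoE) models (like Mixtral 8x7B) route each token through only a subset of their parameters. Understanding these differences is key to choosing the right base model.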

- Open Source Initiatives: The increasing availability of open-source and open-weight LLMs (e.g., Llama 2, Mistral 7B) lets developers experiment and fine-tune without relying solely on proprietary APIs. This fosters innovation and gives greater control over model behavior.

- Instruction Tuning: Training models to follow human instructions has become a standard practice. This involves using datasets of prompts and desired responses to align the model's behavior with user expectations.
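As a minimal sketch of what instruction-tuning data can look like, the snippet below turns (instruction, response) pairs into prompt/completion records serialized as JSON Lines. The prompt template, the `prompt`/`completion` field names, and the helper functions are assumptions for illustration; each instruction-tuned model defines its own format, so match the one your base model and training framework expect.

```python
import json

# Hypothetical prompt template; use the format your base model was trained with.
TEMPLATE = "### Instruction:\n{instruction}\n\n### Response:\n"


def to_training_record(instruction: str, response: str) -> dict:
    """Turn one (instruction, response) pair into a prompt/completion record."""
    return {
        "prompt": TEMPLATE.format(instruction=instruction.strip()),
        "completion": response.strip(),
    }


def to_jsonl(pairs: list[tuple[str, str]]) -> str:
    """Serialize pairs as JSON Lines: one training example per line."""
    return "\n".join(
        json.dumps(to_training_record(i, r), ensure_ascii=False) for i, r in pairs
    )


pairs = [
    ("Summarize: The meeting moved to Friday.", "The meeting is now on Friday."),
    ("Translate to French: Good morning", "Bonjour"),
]
print(to_jsonl(pairs))
```

During training, the loss is often computed only on the completion tokens, so the model learns to produce responses rather than to repeat prompts.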

Why Fine-Tune an LLM?

- Improved Accuracy: Fine-tuning often boosts accuracy on target tasks substantially compared to zero-shot or few-shot prompting, particularly for specialized domains or output formats.

- Reduced Hallucinations: Training on curated, task-specific data can reduce factually incorrect or nonsensical outputs within that domain, although it does not eliminate hallucinations.

- Controlled Behavior: Fine-tuning allows you to shape the model's style, tone, and overall behavior to match your desired application.

- Increased Efficiency: A smaller fine-tuned model can often match or outperform a larger, general-purpose model on a narrow task, at a lower inference cost.

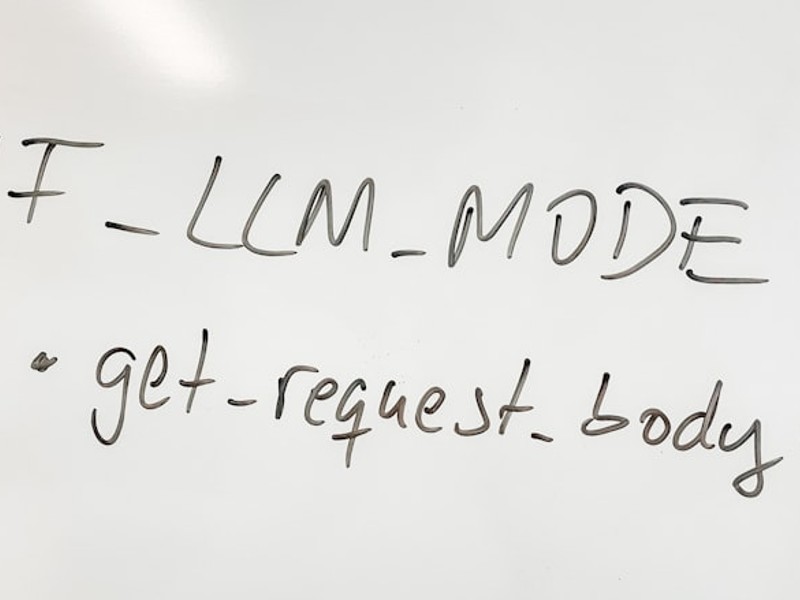

2. Preparing Your Data for Fine-Tuning

Data quality and preparation are paramount for successful fine-tuning. Garbage in, garbage out applies here more than ever.

Data Collection and Annotation

- Gather Relevant Data: The dataset should be representative of the target task and contain a sufficient number of examples. Consider using data augmentation techniques to increase dataset size.

- High-Quality Annotations: Ensure that the data is accurately and consistently annotated. Use professional annotators or implement rigorous quality control measures.

- Data Privacy and Security: Adhere to data privacy regulations and implement appropriate security measures to protect sensitive information.
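One simple privacy measure is to redact obvious personal identifiers before text enters a training set. The sketch below assumes regex-based detection is acceptable as a first pass and masks e-mail addresses and phone-like numbers. The patterns and the `redact` helper are hypothetical: they will miss real PII (names, addresses, ID numbers) and can flag some non-PII, such as dates written with dashes.

```python
import re

# Illustrative patterns only; real PII detection needs much broader coverage.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text: str) -> str:
    """Replace e-mail addresses and phone-like digit runs with placeholder tags."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


print(redact("Contact jane.doe@example.com or +1 (555) 123-4567."))
# → Contact [EMAIL] or [PHONE].
```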

Data Cleaning and Preprocessing

- Remove Noise and Inconsistencies: Clean the data by removing irrelevant information, duplicates, and errors.

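- Tokenization and Encoding: Convert the text into token IDs, the numerical representation the model operates on. Use the same tokenizer as the pre-trained LLM; a different tokenizer would produce IDs that do not correspond to the embeddings the model learned during pre-training.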

- Data Splitting: Divide the data into training, validation, and test sets. The validation set is used to monitor the model's performance during training and to tune hyperparameters, and the test set is held back until the end to evaluate the final model on unseen data.
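The cleaning and splitting steps above can be sketched with the standard library alone. This illustrative version assumes each example is a single string: it normalizes Unicode and whitespace, drops empty and duplicate examples (case-insensitively), then shuffles with a fixed seed and cuts an 80/10/10 train/validation/test split. The helper names and split ratios are assumptions rather than a prescribed recipe, and tokenization is left out because it must use the base model's own tokenizer.

```python
import random
import unicodedata


def normalize(text: str) -> str:
    """Unicode-normalize (NFKC) and collapse runs of whitespace."""
    return " ".join(unicodedata.normalize("NFKC", text).split())


def deduplicate(examples: list[str]) -> list[str]:
    """Drop empty strings and duplicates (compared case-insensitively), keeping first occurrences."""
    seen: set[str] = set()
    unique = []
    for ex in examples:
        key = normalize(ex).lower()
        if key and key not in seen:
            seen.add(key)
            unique.append(normalize(ex))
    return unique


def split(examples: list[str], train: float = 0.8, val: float = 0.1, seed: int = 42):
    """Shuffle with a fixed seed for reproducibility, then cut train/validation/test."""
    items = list(examples)
    random.Random(seed).shuffle(items)
    n_train = int(len(items) * train)
    n_val = int(len(items) * val)
    return items[:n_train], items[n_train:n_train + n_val], items[n_train + n_val:]


raw = [f"example {i}" for i in range(100)] + ["Example 0", "  example   1 ", ""]
clean = deduplicate(raw)
train_set, val_set, test_set = split(clean)
print(len(clean), len(train_set), len(val_set), len(test_set))  # → 100 80 10 10
```

Deduplicating before splitting matters: otherwise copies of the same example can land in both the training and test sets and inflate the evaluation results.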

3. Selecting the Right LLM for Your Task

The choice of base LLM is a critical decision. Consider factors such as model size, architecture, pre-training data, and licensing terms. Newer and larger models often perform better, but they typically require more computational resources.

Open-Source vs. Proprietary Models

- Open-Source Models (e.g., Llama 2, Mistral 7B, Falcon): Offer greater flexibility, transparency, and control. They are often more cost-effective and allow full customization, within the limits of each model's license.

- Proprietary Models (e.g., GPT-4, Claude): Generally offer state-of-the-art performance but come with higher costs and licensing restrictions. They are accessed through managed APIs, which simplifies deployment, and fine-tuning is possible only where the vendor offers it through that API.

Evaluating Model Performance

- Benchmark Datasets: Use publicly available benchmark datasets to compare candidate LLMs, and complement them with a held-out sample of your own task data, since general benchmarks may not reflect your use case.

- Zero-Shot and Few-Shot Evaluation: Assess the model's performance without any fine-tuning (zero-shot) or with a small number of examples (few-shot) to get a baseline understanding.

- Consider Resource Constraints: Balance performance with computational resources and budget limitations.
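A quick back-of-the-envelope check helps with resource planning: the memory needed just to hold a model's weights is the parameter count times the bytes per parameter. The helper below is a hypothetical sketch; it ignores activations, the KV cache, and framework overhead, so real usage is higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Memory to store the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bits_per_param / 8 / 1e9


# A 7B-parameter model at different precisions: 28.0, 14.0, 7.0 and 3.5 GB.
for label, bits in [("fp32", 32), ("fp16/bf16", 16), ("int8", 8), ("4-bit", 4)]:
    print(f"7B model, {label}: {weight_memory_gb(7e9, bits):.1f} GB")
```

The 4-bit figure shows why quantized approaches such as QLoRA can fit the weights of a 7B model into a few gigabytes of GPU memory.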

4. Fine-Tuning Techniques: A Deep Dive

Several fine-tuning techniques exist, each with its own advantages and disadvantages. Choosing the right technique depends on your specific needs and resources.

Full Fine-Tuning

- Update All Parameters: This involves updating every parameter of the pre-trained LLM. It often yields the best task performance but requires significant computational resources and memory, since gradients and optimizer states must be stored for every weight.

- Suitable for Large Datasets: Full fine-tuning is most effective when you have a large dataset that is representative of the target task.
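To see why full fine-tuning is so memory-hungry, consider a common mixed-precision setup with the Adam optimizer, which keeps roughly 16 bytes of training state per parameter. The breakdown below is an assumption about one typical configuration (other optimizers and precisions change the numbers), and it excludes activations, which add more on top.

```python
# Assumed mixed-precision Adam setup: bytes of training state per parameter.
BYTES_PER_PARAM = {
    "fp16 weights": 2,
    "fp16 gradients": 2,
    "fp32 master copy of weights": 4,
    "Adam first moment (fp32)": 4,
    "Adam second moment (fp32)": 4,
}


def full_finetune_memory_gb(num_params: float) -> float:
    """Approximate weights + gradients + optimizer state, excluding activations."""
    return num_params * sum(BYTES_PER_PARAM.values()) / 1e9


print(f"{full_finetune_memory_gb(7e9):.0f} GB")  # → 112 GB for a 7B model
```

This is more than a single 80 GB GPU holds, which is why full fine-tuning of even mid-sized models is usually spread across several GPUs.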

Parameter-Efficient Fine-Tuning (PEFT)

PEFT methods address the high computational cost of full fine-tuning by only updating a small subset of model parameters. This is a major trend in LLM fine-tuning.

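- Low-Rank Adaptation (LoRA): Freezes the pre-trained weights and, for selected weight matrices, learns an update expressed as the product of two small low-rank matrices; only these low-rank matrices are trained. This significantly reduces the number of trainable parameters, and the learned update can be merged into the original weights after training.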

- Prefix Tuning: Prepends a small set of trainable continuous vectors (the prefix) to the attention keys and values at every layer. The model adapts to the target task while the original LLM weights stay frozen.

- Prompt Tuning: Learns a few continuous "soft prompt" embeddings that are prepended to the input embeddings only. It trains even fewer parameters than prefix tuning, making it a very lightweight alternative to full fine-tuning.

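- QLoRA: Quantizes the frozen pre-trained model to 4-bit precision, then trains small Low-Rank Adapter (LoRA) layers on top, backpropagating through the quantized weights. This dramatically reduces memory usage, enabling fine-tuning of large models on a single GPU (the original QLoRA work fine-tuned a 65B-parameter model on one 48 GB GPU).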
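The core LoRA idea can be shown without a deep learning framework. In this sketch, a frozen weight matrix W of shape (d_out, d_in) is paired with trainable matrices A of shape (r, d_in) and B of shape (d_out, r), and the layer computes x W^T + (alpha / r) x A^T B^T. Following the original LoRA recipe, A starts as small random values and B as zeros, so the adapter initially leaves the output unchanged. The `LoRALinear` class and helper functions are hypothetical; libraries such as Hugging Face PEFT provide production implementations.

```python
import random

Matrix = list[list[float]]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python matrix product (a: n x k, b: k x m), fine for tiny examples."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


class LoRALinear:
    """Hypothetical LoRA layer: y = x W^T + (alpha / r) * x A^T B^T.

    W stays frozen; only A and B would be updated during fine-tuning.
    """

    def __init__(self, weight: Matrix, r: int, alpha: float, seed: int = 0):
        d_out, d_in = len(weight), len(weight[0])
        rng = random.Random(seed)
        self.weight = weight  # frozen pre-trained weight, shape (d_out, d_in)
        # Trainable A, shape (r, d_in): small random init.
        self.A = [[rng.gauss(0.0, 0.01) for _ in range(d_in)] for _ in range(r)]
        # Trainable B, shape (d_out, r): zero init, so the update B A starts at zero.
        self.B = [[0.0] * r for _ in range(d_out)]
        self.scaling = alpha / r

    def forward(self, x: Matrix) -> Matrix:
        """x has shape (batch, d_in); the result has shape (batch, d_out)."""
        base = matmul(x, transpose(self.weight))
        update = matmul(matmul(x, transpose(self.A)), transpose(self.B))
        return [
            [b + self.scaling * u for b, u in zip(base_row, upd_row)]
            for base_row, upd_row in zip(base, update)
        ]


def trainable_params(d_out: int, d_in: int, r: int) -> tuple[int, int]:
    """Trainable parameters for one weight matrix: full fine-tuning vs. LoRA."""
    return d_out * d_in, r * (d_in + d_out)


layer = LoRALinear([[1.0, 0.0], [0.0, 1.0]], r=1, alpha=2.0)
print(layer.forward([[3.0, 4.0]]))  # → [[3.0, 4.0]] because B starts at zero

full, lora = trainable_params(4096, 4096, r=8)
print(full, lora, f"{lora / full:.2%}")  # → 16777216 65536 0.39%
```

For a single 4096 × 4096 projection with rank 8, LoRA trains 65,536 parameters instead of 16,777,216, about 0.39% of the original count.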

Recent Advances in PEFT

- AdapterFusion: Learns to combine multiple adapters, each trained on a different task, to create a more robust and versatile model.

- UniPELT: A unified framework that incorporates several PEFT methods (adapters, prefix tuning, and LoRA) as submodules and learns gates that control how much each one contributes.

5. Frameworks and Tools for Fine-Tuning

Several frameworks and tools simplify the fine-tuning process and provide pre-built functionalities. Keeping up with the latest versions and features is essential.

Popular Frameworks

- Hugging Face Transformers: A comprehensive library that provides pre-trained models, tokenizers, and training utilities such as the Trainer class, while the companion PEFT library implements methods like LoRA and prompt tuning. It supports a wide range of LLMs. Check the release notes regularly for new features and bug fixes.

- PyTorch Lightning: A lightweight wrapper around PyTorch that simplifies the training process and provides features such as automatic mixed precision and distributed training.

- TensorFlow/Keras: Another popular deep learning framework that offers a wide range of tools and resources for fine-tuning LLMs.

Cloud-Based Platforms

- Google Cloud Vertex AI: Provides a managed platform for training and deploying LLMs. It offers features such as automatic hyperparameter tuning and model monitoring.

- Amazon SageMaker: A similar platform offered by Amazon Web Services (AWS). It provides a wide range of tools and services for machine learning.

- Microsoft Azure Machine Learning: Microsoft's cloud-based machine learning platform that offers similar functionalities.

6. Monitoring and Evaluating Fine-Tuned Models

Monitoring and evaluating the performance of your fine-tuned model is crucial for identifying potential issues and optimizing performance. Robust evaluation metrics are essential.

Key Metrics

- Accuracy: The percentage of correct predictions.

- Precision: The proportion of correctly identified positive cases out of all cases identified as positive.

- Recall: The proportion of correctly identified positive cases out of all actual positive cases.

- F1-Score: The harmonic mean of precision and recall.

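- BLEU Score: A metric for machine translation quality, based on n-gram precision against one or more reference translations.

- ROUGE Score: A family of recall-oriented metrics, based on n-gram overlap with reference texts, commonly used to evaluate summarization.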

- Perplexity: A measure of how well a language model predicts a sequence of tokens, computed as the exponential of the average negative log-likelihood per token. Lower perplexity indicates better predictions.
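Several of these metrics are short formulas. The sketch below assumes a binary classification task with labels 0 and 1 and computes accuracy, precision, recall, and F1 from predictions. It also computes perplexity from per-token natural-log probabilities, which a model would normally supply. The function names are hypothetical; BLEU and ROUGE are omitted because they involve more bookkeeping and are usually taken from an established implementation.

```python
import math


def binary_metrics(y_true: list[int], y_pred: list[int]) -> dict[str, float]:
    """Accuracy, precision, recall and F1 for a binary task where 1 is the positive class."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    # F1 is the harmonic mean of precision and recall.
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}


def perplexity(token_log_probs: list[float]) -> float:
    """exp(mean negative log-likelihood per token), using natural-log probabilities."""
    return math.exp(-sum(token_log_probs) / len(token_log_probs))


print(binary_metrics([1, 1, 0, 0, 1], [1, 0, 0, 1, 1]))
# A model that assigns probability 0.5 to every observed token has perplexity ≈ 2.0.
print(perplexity([math.log(0.5)] * 4))
```

In the example, accuracy is 0.6 while precision, recall, and F1 are all 2/3, a reminder that these metrics capture different aspects of the errors.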

Monitoring Techniques

- Training Curves: Monitor the training and validation loss to identify overfitting or underfitting.

- Confusion Matrix: For classification tasks, tabulate true versus predicted labels to see which classes the model confuses with one another.

- Error Analysis: Analyze the model's errors to identify patterns and areas for improvement.
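Two of these monitoring techniques can be sketched in a few lines. The confusion-matrix helper counts (true, predicted) label pairs, and the early-stopping helper picks the epoch to keep once validation loss has stopped improving for a given number of epochs (the patience), a common response to the overfitting that training curves reveal. Both functions and the toy data are illustrative assumptions.

```python
from collections import Counter


def confusion_matrix(y_true: list[str], y_pred: list[str], labels: list[str]) -> list[list[int]]:
    """Rows are true labels, columns are predicted labels, in the order of `labels`."""
    counts = Counter(zip(y_true, y_pred))
    return [[counts[(t, p)] for p in labels] for t in labels]


def best_epoch_with_patience(val_losses: list[float], patience: int = 2) -> int:
    """Return the 0-based epoch with the best validation loss, stopping the scan
    once the loss has failed to improve for `patience` consecutive epochs."""
    best_epoch, best_loss, waited = 0, float("inf"), 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss:
            best_epoch, best_loss, waited = epoch, loss, 0
        else:
            waited += 1
            if waited >= patience:
                break
    return best_epoch


labels = ["negative", "neutral", "positive"]
y_true = ["positive", "neutral", "negative", "positive", "neutral"]
y_pred = ["positive", "positive", "negative", "positive", "neutral"]
for label, row in zip(labels, confusion_matrix(y_true, y_pred, labels)):
    print(label, row)  # negative [1, 0, 0] / neutral [0, 1, 1] / positive [0, 0, 2]

# Validation loss falls, then rises: keep the checkpoint from epoch 2.
print(best_epoch_with_patience([1.20, 0.95, 0.88, 0.91, 0.97, 1.05]))  # → 2
```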

7. Deployment and Inference Optimization

Deploying your fine-tuned LLM and optimizing inference speed are critical for real-world applications. Efficient inference is key to scalability.

Deployment Options

- Cloud-Based APIs: Deploy the model as a REST API using cloud-based platforms such as Google Cloud Vertex AI, Amazon SageMaker, or Microsoft Azure Machine Learning.

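- Serverless Functions: Deploy the model as a serverless function using platforms such as AWS Lambda or Google Cloud Functions. This suits small models best, because these platforms often impose memory limits and offer limited or no GPU support.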

- On-Premise Deployment: Deploy the model on your own servers.

Inference Optimization Techniques

- Quantization: Store the weights at lower precision (e.g., 8-bit or 4-bit) to shrink the model's memory footprint. This can also improve inference speed, depending on hardware and kernel support.

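- Pruning: Remove low-importance weights or structures (for example, the weights with the smallest magnitudes) to reduce the model's size and complexity. Actual speedups usually require structured pruning or kernels that exploit sparsity.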

- Knowledge Distillation: Train a smaller, faster model to mimic the behavior of the larger, fine-tuned model.

- TensorRT: NVIDIA's TensorRT is a high-performance inference optimizer and runtime for deep learning applications.
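The first three techniques can be illustrated on a toy list of weights. The sketch below shows symmetric ("absmax") int8 quantization with a single scale factor, magnitude pruning that zeros the smallest weights, and the soft-target distillation loss, a KL divergence between temperature-softened teacher and student distributions. These are simplified assumptions with hypothetical helper names: production quantization schemes typically use per-channel or block-wise scales and calibration, and zeroed weights only save compute when the runtime exploits the sparsity.

```python
import math


def quantize_int8(weights: list[float]) -> tuple[list[int], float]:
    """Symmetric absmax quantization: map [-max|w|, max|w|] onto integers in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q: list[int], scale: float) -> list[float]:
    """Recover approximate weights; rounding error is at most about scale / 2 per weight."""
    return [v * scale for v in q]


def magnitude_prune(weights: list[float], sparsity: float) -> list[float]:
    """Zero out the fraction `sparsity` of weights with the smallest absolute values."""
    k = int(len(weights) * sparsity)
    smallest = set(sorted(range(len(weights)), key=lambda i: abs(weights[i]))[:k])
    return [0.0 if i in smallest else w for i, w in enumerate(weights)]


def softmax(logits: list[float], temperature: float = 1.0) -> list[float]:
    scaled = [z / temperature for z in logits]
    top = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(z - top) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def distillation_loss(student_logits: list[float], teacher_logits: list[float],
                      temperature: float = 2.0) -> float:
    """Soft-target loss: KL(teacher || student) on softened distributions, times T^2."""
    p = softmax(teacher_logits, temperature)
    q = softmax(student_logits, temperature)
    kl = sum(pi * math.log(pi / qi) for pi, qi in zip(p, q) if pi > 0)
    return kl * temperature ** 2


weights = [0.5, -1.27, 0.02, 0.9]
q, scale = quantize_int8(weights)
print(q)                              # → [50, -127, 2, 90]
print(magnitude_prune(weights, 0.5))  # → [0.0, -1.27, 0.0, 0.9]
print(distillation_loss([2.0, 1.0, 0.1], [3.0, 1.0, 0.2]))  # small positive value
```

In practice the distillation term is usually combined with the ordinary loss on the true labels when training the student.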

8. Addressing Challenges and Future Trends

Fine-tuning LLMs is not without its challenges. Addressing these challenges and staying ahead of future trends is crucial for long-term success.

Current Challenges

- Data Scarcity: Obtaining sufficient high-quality data for fine-tuning can be challenging, especially for specialized tasks. Data augmentation and synthetic data generation techniques can help mitigate this issue.

- Catastrophic Forgetting: Fine-tuning can sometimes lead to the model forgetting its pre-trained knowledge. Regularization techniques and continual learning strategies can help prevent this.

- Bias and Fairness: LLMs can inherit biases from their pre-training data. It is important to carefully evaluate the model for bias and implement mitigation strategies.
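As one example of a regularization technique against catastrophic forgetting, the sketch below adds an L2-SP style penalty that pulls fine-tuned weights back toward their pre-trained values. The function names, toy weights, and penalty strength are assumptions for illustration. Elastic weight consolidation (EWC) refines the same idea by weighting each parameter's term by an estimate of its importance to the original model.

```python
def l2_sp_penalty(params: list[float], pretrained: list[float], strength: float) -> float:
    """(strength / 2) * ||theta - theta_0||^2: grows as weights drift from pre-trained values."""
    return 0.5 * strength * sum((p - p0) ** 2 for p, p0 in zip(params, pretrained))


def regularized_loss(task_loss: float, params: list[float], pretrained: list[float],
                     strength: float = 0.01) -> float:
    """Fine-tuning objective: task loss plus the drift penalty."""
    return task_loss + l2_sp_penalty(params, pretrained, strength)


theta_0 = [0.20, -0.50, 1.00]  # pre-trained weights (toy values)
theta = [0.25, -0.40, 1.00]    # weights after some fine-tuning steps (toy values)
print(regularized_loss(0.70, theta, theta_0, strength=1.0))  # ≈ 0.70625
```

A larger `strength` keeps the model closer to its pre-trained behavior at the cost of slower adaptation to the new task, so it is typically tuned on the validation set.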

Future Trends

- Continual Learning: Developing techniques that allow LLMs to continuously learn and adapt to new data without forgetting previous knowledge.

- Multi-Task Learning: Training LLMs on multiple tasks simultaneously to improve generalization and efficiency.

- Explainable AI (XAI): Developing techniques that allow us to understand and interpret the decisions made by LLMs.

- Edge Computing: Deploying LLMs on edge devices to reduce latency and improve privacy.

Conclusion

Fine-tuning LLMs is a powerful technique for tailoring them to specific tasks. By staying current with the latest models, frameworks, and techniques, you can substantially improve performance on your target tasks and build innovative applications. Embrace the evolving landscape, experiment with different approaches, and continuously monitor and evaluate your models to achieve the best results. Start fine-tuning your LLMs today!

FAQ

Q: What are the biggest changes in LLM fine-tuning recently?

A: The most significant changes are the rise of Parameter-Efficient Fine-Tuning (PEFT) methods like LoRA and QLoRA, making it possible to fine-tune much larger models on consumer hardware. Also, the increasing availability of open-source models gives more control and customization options.

Q: How much data do I need for fine-tuning?

A: The amount of data needed depends on the complexity of the task and the size of the LLM. While full fine-tuning benefits from larger datasets (thousands or tens of thousands of examples), PEFT methods can often achieve good results with smaller datasets (hundreds or thousands of examples).

Q: What hardware is required for fine-tuning LLMs?

A: Hardware requirements vary depending on the model size and fine-tuning technique. Full fine-tuning of large models typically requires GPUs with significant memory (e.g., NVIDIA A100 or H100). PEFT methods, especially QLoRA, can enable fine-tuning on consumer-grade GPUs with 16-24 GB of VRAM.

Q: How do I choose the right evaluation metrics for my fine-tuned model?

A: The choice of evaluation metrics depends on the nature of the task. For classification tasks, accuracy, precision, recall, and F1-score are commonly used. For text generation tasks, BLEU and ROUGE scores are often used. Perplexity is a general metric for evaluating language model performance.

Q: What are the ethical considerations when fine-tuning LLMs?

A: It is crucial to be aware of potential biases in the training data and to evaluate the model for bias and fairness. Consider the potential impact of the model's outputs on different groups of people and implement mitigation strategies to address any identified biases. Transparency and accountability are also important ethical considerations.